
In addition to the “DSA transparency reports” on its content moderation, about which I’ve written previously, X, like other major online platforms, also submits “systemic risk assessment” (SRA) reports to the EU to comply with its obligations under the Digital Services Act. These risk assessments are precisely supposed to guide the platform in designing its “content moderation,” so as to mitigate “systemic risks” arising from the use of the platform: for instance, from the spread of “harmful misinformation,” as X’s latest report puts it (p. 53). That report can be found at X’s “Transparency Center” here. The above excerpt comes from it. As you can see, the report is redacted!

These are not the only redactions. X’s 2025 SRA report is in fact redacted throughout, and very heavily redacted in parts. The above excerpt comes from p. 12. Readers will be reassured to know that X “continued to proactively engage and exchange information with the European Commission, the European External Action Service (EEAS), the European Parliament, and Member State’s key authorities…”. Thank goodness that the “Twitter Files” exposed some occasional contacts with the US government and that, by Executive Order or something like that, this shall never happen again!

But that is just by the by, on the unredacted bits…

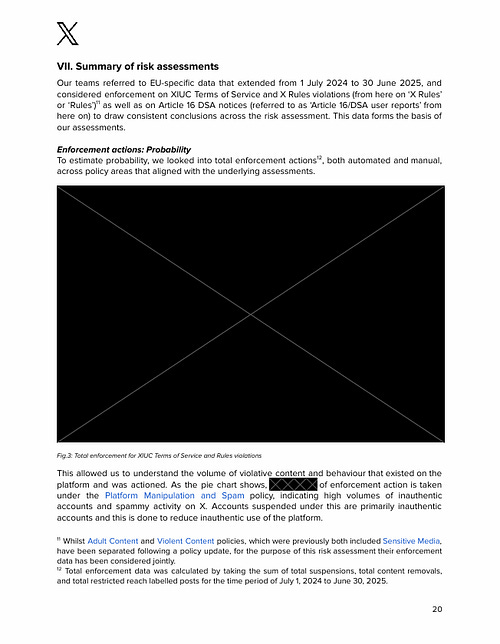

Here, for instance, is what p. 20 looks like.

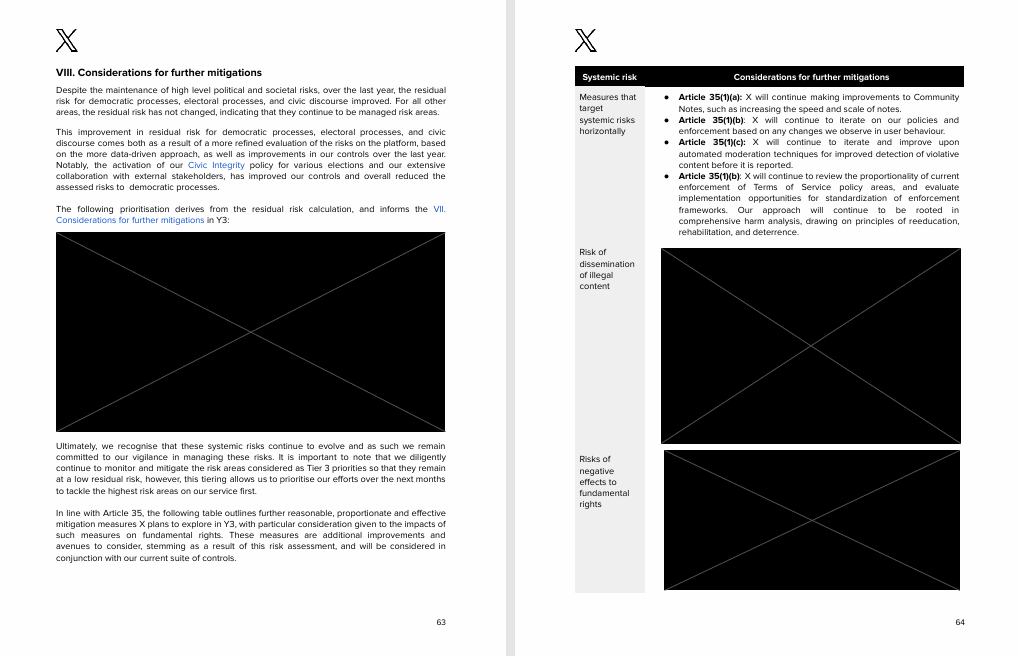

And here are pp. 63-64.

In particular, it is evident from a perusal of the document that all quantitative data (for instance, on reported content and X’s “enforcement action”) has been hidden. See, for instance, the passage below on misinformation as a “public health risk.”

The redaction of the data on X’s “enforcement actions” (i.e., content removals, withholding of content in certain jurisdictions, and restriction of visibility) means that I cannot repeat the kind of analysis I have conducted in the past, which showed that “visibility-filtering” is precisely the main form that censorship takes on X.

My analysis was based on X’s 2024 “DSA Transparency Report.” That report showed that X took “enforcement action” on an astounding 226,350 of 238,108 items reported to it by EU-based “individuals and entities” in the reporting period. Since 40,331 items were deleted and access to 62,802 items was withheld in the EU, this means that the remaining 123,217 items, over half of those actioned, “merely” had their visibility restricted. But X’s subsequent “DSA Transparency Reports” also hide the aggregate data required to infer the extent of “visibility-filtering”: not by redacting the aggregate figure but simply by not mentioning it at all! Perhaps I struck a chord…
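The inference above is simple subtraction. Here is a minimal Python sketch that uses only the figures quoted from the 2024 report. Like the inference itself, it assumes that every actioned item received exactly one type of enforcement action, so removals, EU withholdings and visibility restrictions do not overlap. The variable names are illustrative only.

```python
# Figures as quoted from X's 2024 DSA Transparency Report (EU reports, reporting period)
reported = 238_108        # items reported by EU-based "individuals and entities"
actioned = 226_350        # items on which X took "enforcement action"
removed = 40_331          # items deleted
withheld_in_eu = 62_802   # items whose access was withheld in the EU

# Assumption: each actioned item got exactly one action type, so whatever
# was neither removed nor withheld must have had its visibility restricted.
visibility_restricted = actioned - removed - withheld_in_eu

action_rate = actioned / reported
share_of_actioned = visibility_restricted / actioned
share_of_reported = visibility_restricted / reported

print(f"Actioned share of reported items: {action_rate:.1%}")
print(f"Visibility-restricted items: {visibility_restricted:,}")
print(f"Share of actioned items: {share_of_actioned:.1%}")
print(f"Share of reported items: {share_of_reported:.1%}")
```

On these figures, visibility restriction accounts for more than half of the actioned items, and also for more than half of all reported items.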

X’s practice of redacting its “systemic risk assessment” (SRA) reports is not typical, by the way. A full archive of such reports is available here. Based on some quick sampling, the only other company I have found redacting its reports is TikTok. The Facebook, LinkedIn and Google/YouTube reports that I have consulted contain no redactions. Readers are welcome to look for themselves, and I would, of course, welcome feedback.

The publicly available versions of X’s 2023 and 2024 SRA reports are also redacted. Earlier this year, the watchdog organization Follow The Money asked the European Commission for access to the unredacted versions. On the organization’s own account (registration required), the Commission’s response was not only to deny the request, but also to change its internal rules to create a presumption of confidentiality for DSA-related documents. So much for “transparency” — both that of X and that of the European Commission.

Robert Kogon's Newsletter is free today. But if you enjoyed this post, you can tell Robert Kogon's Newsletter that their writing is valuable by pledging a future subscription. You won't be charged unless they enable payments.